The Commodore 64 AI module isn’t just a novelty. It’s a working example of what happens when modern machine learning collides with vintage computing. At Security Fest 2025 in Gothenburg, Marek Zmysłowski and Konrad Jędrzejczyk revealed a fully functional Commodore 64 AI cartridge powered by a local LLM and a dataset drawn from some 400 Commodore books. Forget gimmicks: this thing runs offline, draws power from the original C64, and delivers real-time responses over the user port.

Below are ten key takeaways from their detailed and unusually entertaining talk, which is well worth watching.

1. The Cartridge Looks and Feels Like It’s 1985

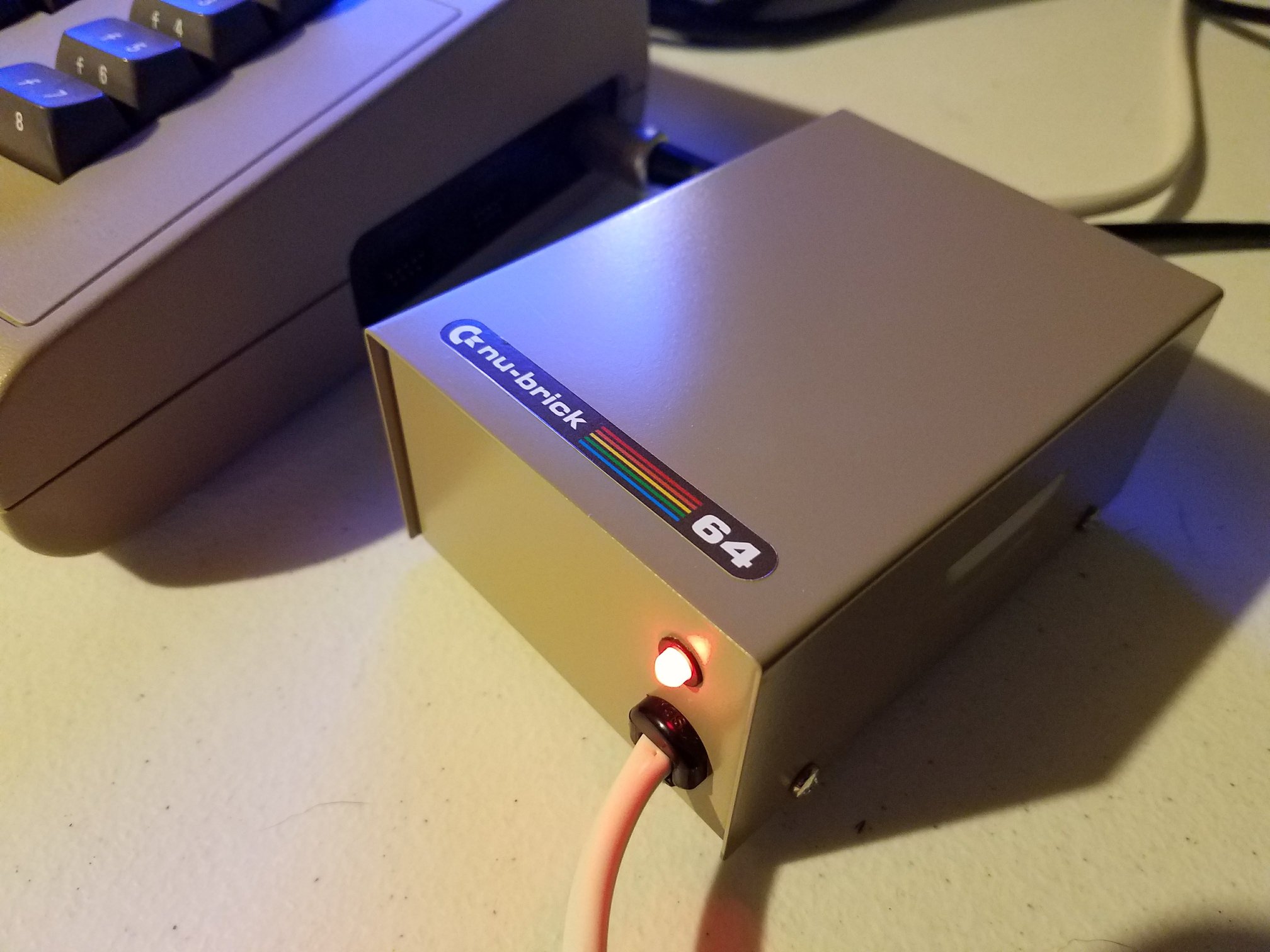

To keep things authentic, the team designed the Commodore 64 AI module as a cartridge with the exact physical and electrical specs of a vintage C64 peripheral. No modifications are needed: just plug it into the user port and it behaves as if it were built 40 years ago, except that it happens to host a large language model.

That attention to period accuracy wasn’t just for aesthetics. They wanted it to be plug-and-play for C64 owners who still use real hardware.

2. It Requires No Cloud, No Internet, and No External Power

One of the module’s standout features is that it runs entirely offline. There is no cloud processing; the onboard dev board handles all inference locally. Better yet, it draws power directly from the Commodore 64, with no wall warts, USB cables, or external bricks.

This simplicity keeps the setup authentic to the original computing experience, but getting that to work took some creative engineering and efficient hardware design.

3. It’s Powered by a Miniature Linux Box

Inside the cartridge is a Radxa Zero, a compact Linux-based single-board computer. With 8GB of RAM, it’s beefy enough to run a small LLM locally. It’s also far more obscure than the ubiquitous Raspberry Pi.

This choice wasn’t about trendiness. It was one of the few boards that could meet the power, size, and processing demands—all within the classic cartridge footprint.
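How much RAM a local model needs comes down mostly to parameter count and numeric precision. The sketch below is a generic rule of thumb, not the team’s measurement: it multiplies parameters by bytes per parameter and ignores the KV cache, activations, and runtime overhead. TinyLlama’s roughly 1.1 billion parameters serve as the example because that model comes up later in the talk; the precision table is an assumption.

```python
# Rough weight-memory estimate for a local LLM: parameters x bytes per parameter.
# Ignores KV cache, activations, and runtime overhead, which add more on top.

BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floats
    "int8": 1.0,  # 8-bit quantization
    "q4": 0.5,    # ~4-bit quantization (real formats add small per-block overhead)
}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Return the approximate size of the model weights in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / 2**30


if __name__ == "__main__":
    tinyllama_params = 1.1e9  # TinyLlama has roughly 1.1 billion parameters
    for precision in ("fp16", "int8", "q4"):
        print(f"{precision}: {weight_memory_gib(tinyllama_params, precision):.2f} GiB")
```

At fp16 the weights alone come to about 2 GiB, and 4-bit quantization cuts that to roughly half a GiB, which suggests why a board with several gigabytes leaves headroom for the OS, the embedding model, and a vector index.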

4. The AI Was Trained on 400 Commodore Books

Rather than rely on an off-the-shelf model’s generic internet knowledge, the team built a specialized dataset: content from more than 400 Commodore 64-related books, carefully extracted via OCR and then processed with ChatGPT’s API.

This gave the AI an unusual edge—it speaks fluent Commodore. Ask it about SID programming, BASIC quirks, or cassette loading routines, and it responds like it was trained at a BBS in 1986.
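The talk doesn’t detail how the OCR output was cleaned or split, so the following is only a sketch of a typical first step: repairing common OCR debris and cutting each book into overlapping word chunks. The ChatGPT API processing is not reproduced here, and the function names, chunk size, and overlap values are illustrative assumptions.

```python
# Clean OCR'd book text and split it into overlapping word-based chunks.
# Chunk size and overlap are illustrative assumptions, not the team's settings.
import re
from typing import List


def clean_ocr_text(raw: str) -> str:
    """Rejoin words hyphenated across line breaks and collapse whitespace."""
    text = re.sub(r"-\n(\w)", r"\1", raw)  # "implemen-\ntation" -> "implementation"
    text = re.sub(r"\s+", " ", text)        # newlines and runs of spaces -> one space
    return text.strip()


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> List[str]:
    """Return chunks of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    page = "The SID chip is a synthe-\nsizer with three\nvoices.  Each voice has an ADSR envelope."
    for chunk in chunk_words(clean_ocr_text(page), size=6, overlap=2):
        print(chunk)
```

The overlap means a fact that straddles a chunk boundary still appears intact in at least one chunk. Note that the hyphen repair will also merge genuinely hyphenated compounds split across lines, a trade-off a real pipeline would need to handle.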

5. They Tried TinyLlama… and It Failed

The first model they tested was the instruction-tuned variant of TinyLlama. It worked in principle but choked on technical questions: the material was too rich and the model too small, and its responses were slow, vague, or hilariously wrong.

Rather than force it, they pivoted to a more effective approach using a technique called Retrieval Augmented Generation (RAG).

6. Retrieval Augmented Generation (RAG) Was the Real MVP

Instead of relying entirely on what the model memorized during training (its parametric memory), the final system uses RAG: at query time, it fetches relevant passages from a vector database of Commodore material. It embeds the question, retrieves the closest-matching text chunks, and feeds them to the LLM as context for its reply.

This way, even a small model can seem smart, as long as it knows where to look. The RAG system was built and tested on real Commodore documents, which were converted into embeddings with the GTE (General Text Embeddings) model.
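The embed, retrieve, and prompt loop described in this section can be sketched in a few dozen lines of Python. This is a toy, not the team’s code: a hashed bag-of-words vector stands in for GTE embeddings, a plain list stands in for the vector database, and the prompt template and `k` value are assumptions.

```python
# Toy RAG retrieval: embed chunks, rank them by cosine similarity to the question,
# and build a context-stuffed prompt. The hashed bag-of-words embedding is a
# stand-in for a real embedding model such as GTE (assumption).
import math
import re
import zlib
from typing import List, Tuple

DIM = 256  # toy embedding size (assumption)


def embed(text: str) -> List[float]:
    """Hash each lowercase token into one of DIM buckets, then L2-normalize."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9$]+", text.lower()):
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a: List[float], b: List[float]) -> float:
    """Dot product; equals cosine similarity because embed() returns unit vectors."""
    return sum(x * y for x, y in zip(a, b))


def retrieve(question: str, index: List[Tuple[str, List[float]]], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k (score, chunk) pairs most similar to the question."""
    q = embed(question)
    scored = [(cosine(q, vec), chunk) for chunk, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(question: str, hits: List[Tuple[float, str]]) -> str:
    """Put retrieved chunks in front of the question (template is an assumption)."""
    context = "\n".join(f"- {chunk}" for _, chunk in hits)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    docs = [
        "The SID chip at $D400 provides three voices with ADSR envelopes.",
        "POKE 53280,0 sets the border color to black.",
        "The datasette loads programs from cassette tape.",
    ]
    index = [(doc, embed(doc)) for doc in docs]  # built once, ahead of time
    question = "How many voices does the SID chip have?"
    print(build_prompt(question, retrieve(question, index, k=1)))
```

Here the SID sentence ranks first because it shares the most words with the question; a real embedding model such as GTE would also match paraphrases that share no words at all, which is the main reason to use one.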

7. Communication Happens Through the User Port

The team carefully avoided hijacking the expansion port, which many users already occupy with devices like the Ultimate 1541 II+ or RAM Expansion Units (REUs). Instead, they used the underutilized user port, the same port that modems and some printers plugged into in the ’80s.

This decision ensured compatibility across the Commodore 64, SX-64, C128, and VIC-20. It also kept the C64 free to run software as usual, without CPU hijacking or RAM overwrites.
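The article doesn’t describe the software running on the dev board, so here is a hypothetical sketch of what the board’s side of the user port link could look like: read a carriage-return-terminated question, pass it to an answer function, and send back text wrapped for the C64’s 40-column screen. The names `read_line`, `format_for_c64`, and `serve_one` are illustrative; `port_in` and `port_out` are any binary file-like objects (on real hardware, possibly a serial device opened with a library such as pyserial), and `answer_fn` stands in for the RAG pipeline.

```python
# Hypothetical board-side bridge for the user port serial link.
# Reads one CR-terminated question, answers it, and replies wrapped to 40 columns.
import io
import textwrap
from typing import BinaryIO, Callable

CR = b"\r"  # the C64 uses carriage return (0x0D) as its end-of-line character


def read_line(port: BinaryIO) -> str:
    """Read bytes until CR or end of stream and decode them as ASCII."""
    buf = bytearray()
    while True:
        byte = port.read(1)
        if not byte or byte == CR:
            break
        buf += byte
    return buf.decode("ascii", errors="replace")


def format_for_c64(text: str, width: int = 40) -> bytes:
    """Wrap text to the screen width and terminate each line with CR."""
    lines = textwrap.wrap(text, width=width) or [""]
    return b"".join(line.encode("ascii", errors="replace") + CR for line in lines)


def serve_one(port_in: BinaryIO, port_out: BinaryIO, answer_fn: Callable[[str], str]) -> None:
    """Handle a single question/answer exchange; empty questions are ignored."""
    question = read_line(port_in).strip()
    if question:
        port_out.write(format_for_c64(answer_fn(question)))


if __name__ == "__main__":
    # In-memory streams stand in for the real serial device.
    fake_in = io.BytesIO(b"WHAT IS THE SID?\r")
    fake_out = io.BytesIO()
    serve_one(fake_in, fake_out, lambda q: "The SID is the C64's sound chip. " * 3)
    print(fake_out.getvalue())
```

Keeping all formatting on the board side means the C64 only has to display bytes, which fits the goal of leaving the machine free to run software as usual.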

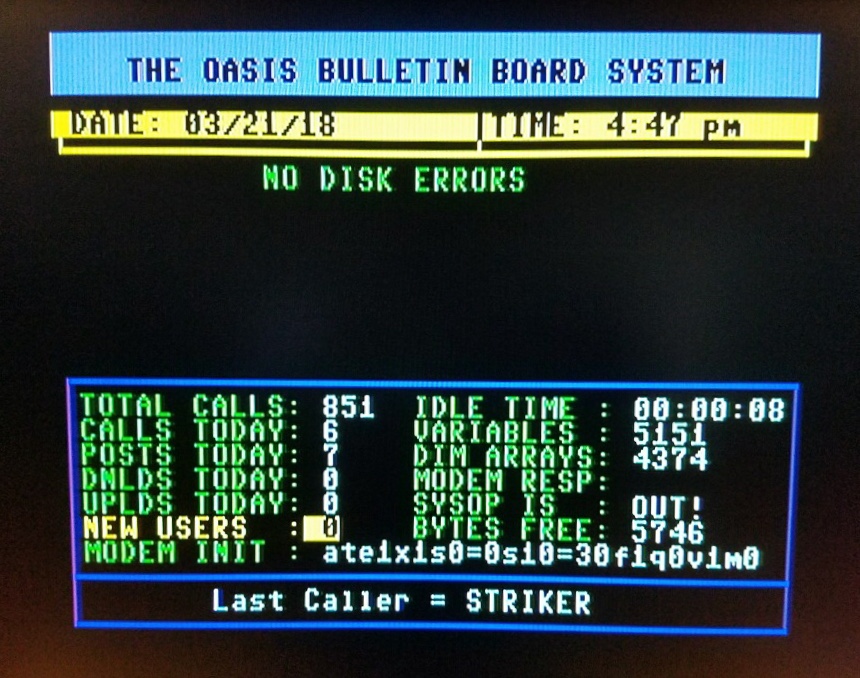

8. It Works With Period-Correct Terminal Software

On the Commodore 64, the module is driven by standard serial terminal software such as Novaterm. The terminal handles input and output, and the LLM’s responses arrive clean and accurate even at slow speeds such as 300 baud.
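Slow baud rates are easy to quantify. Assuming the common 8N1 framing (one start bit, eight data bits, one stop bit, so ten bits per character), which the talk doesn’t specify, throughput is simply the baud rate divided by ten:

```python
# Back-of-the-envelope transfer time over a serial link.
# Assumes 8N1 framing: 1 start bit + 8 data bits + 1 stop bit = 10 bits per character.

BITS_PER_CHAR_8N1 = 10


def chars_per_second(baud: int, bits_per_char: int = BITS_PER_CHAR_8N1) -> float:
    return baud / bits_per_char


def seconds_to_send(num_chars: int, baud: int) -> float:
    return num_chars / chars_per_second(baud)


if __name__ == "__main__":
    screen = 40 * 25  # one full C64 text screen
    for baud in (300, 1200, 2400):
        print(f"{baud} baud: {chars_per_second(baud):.0f} chars/s, "
              f"full screen in {seconds_to_send(screen, baud):.1f} s")
```

At 300 baud that works out to 30 characters per second, so a full 40×25 screen of text takes about 33 seconds; at 2400 baud it drops to around 4 seconds.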

They intentionally avoided modern hacks like HDMI output or software 80-column modes. This project was built for CRTs, dot matrix printers, and floppies, not flat screens.
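Period-correct terminals also mean PETSCII rather than ASCII. Many C64 terminal programs can translate to ASCII themselves, but if the link is left in PETSCII mode, the board side has to map characters on its own. The sketch below assumes the C64’s upper/lowercase character set (in the default uppercase/graphics set, $41-$5A display as capitals instead) and handles only letters, carriage return, and basic punctuation; everything else becomes "?".

```python
# Minimal PETSCII <-> ASCII translation for the C64's upper/lowercase mode.
# Only letters, CR, and codes $20-$40 (space, digits, basic punctuation, '@')
# are handled; anything else is replaced with '?'.


def ascii_to_petscii(text: str) -> bytes:
    out = bytearray()
    for ch in text:
        code = ord(ch)
        if "a" <= ch <= "z":
            out.append(code - 0x20)  # a-z -> $41-$5A (shown as lowercase)
        elif "A" <= ch <= "Z":
            out.append(code + 0x80)  # A-Z -> $C1-$DA (shown as uppercase)
        elif ch == "\n":
            out.append(0x0D)         # C64 end-of-line is CR
        elif 0x20 <= code <= 0x40:
            out.append(code)         # shared range passes through unchanged
        else:
            out.append(ord("?"))
    return bytes(out)


def petscii_to_ascii(data: bytes) -> str:
    chars = []
    for b in data:
        if 0x41 <= b <= 0x5A:
            chars.append(chr(b + 0x20))  # $41-$5A -> a-z
        elif 0xC1 <= b <= 0xDA:
            chars.append(chr(b - 0x80))  # $C1-$DA -> A-Z
        elif 0x61 <= b <= 0x7A:
            chars.append(chr(b - 0x20))  # $61-$7A also display as A-Z
        elif b == 0x0D:
            chars.append("\n")
        elif 0x20 <= b <= 0x40:
            chars.append(chr(b))
        else:
            chars.append("?")
    return "".join(chars)


if __name__ == "__main__":
    encoded = ascii_to_petscii("Hello, SID!\n")
    print(encoded.hex(" "))
    print(petscii_to_ascii(encoded))
```

The case swap is the classic surprise: plain ASCII lowercase text sent unconverted shows up on a C64 in the opposite case.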

9. The Dev Board Nearly Melted—But They Fixed It

One challenge was power consumption. The Radxa Zero can draw up to 2.5 amps, while the +5 V line on the C64’s user port is rated for only about 100 milliamps. To avoid damaging the C64, they added external power connectors and used MAX232 chips to convert between TTL and RS-232 signal levels, much as 1980s modem interfaces did.
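The mismatch is easiest to appreciate in watts. A quick calculation, assuming both currents refer to a 5 V supply (the Radxa Zero is a 5 V board):

```python
# Power-budget comparison for the figures quoted for the cartridge.
# Assumes both currents are drawn from a 5 V rail.

RAIL_VOLTS = 5.0


def watts(volts: float, amps: float) -> float:
    """Electrical power P = V * I."""
    return volts * amps


def shortfall_factor(load_amps: float, available_amps: float) -> float:
    """How many times more current the load needs than the supply provides."""
    return load_amps / available_amps


if __name__ == "__main__":
    board_peak_amps = 2.5  # reported peak draw of the Radxa Zero
    user_port_amps = 0.1   # ~100 mA rating of the user port's +5 V pin
    print(f"Board at peak: {watts(RAIL_VOLTS, board_peak_amps):.1f} W")
    print(f"User port +5 V budget: {watts(RAIL_VOLTS, user_port_amps):.1f} W")
    print(f"Shortfall: {shortfall_factor(board_peak_amps, user_port_amps):.0f}x")
```

That is 12.5 W at peak against a 0.5 W budget, a factor of 25, which is why the user port’s +5 V pin alone cannot feed the board.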

The first version got toasty fast. Later revisions added better cooling and smarter power routing. It’s all done with era-appropriate electronics, not modern breakout boards or jumper wires.

10. It’s Not a Toy—It’s Actually Useful

During the demo, the module answered Commodore-specific questions with detail and precision. When asked something outside its domain, the system clearly flagged the mismatch. Users can also manage the vector store’s contents, rebuild the database, and pipe responses into other C64 software.
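Flagging out-of-domain questions and managing vector content can both be approximated with a similarity threshold over a small store. The sketch below is hypothetical: the `VectorStore` class, the 0.35 threshold, and the six-word `toy_embed` vocabulary are illustrative stand-ins, not details from the talk. If the best match scores below the threshold, the caller can flag the question instead of letting the LLM guess.

```python
# Hypothetical vector store with add/remove and an out-of-domain check.
# If no stored chunk is similar enough to the question, best_match returns None
# so the caller can flag the question instead of letting the LLM guess.
import math
import re
from typing import Callable, Dict, List, Optional, Tuple

Vector = List[float]

# Toy embedding over a tiny fixed vocabulary (stand-in for a real model).
VOCAB = ["sid", "sprite", "basic", "poke", "weather", "recipe"]


def toy_embed(text: str) -> Vector:
    words = re.findall(r"[a-z]+", text.lower())
    return [float(words.count(term)) for term in VOCAB]


def normalize(v: Vector) -> Vector:
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]


class VectorStore:
    def __init__(self, embed: Callable[[str], Vector], threshold: float = 0.35) -> None:
        self.embed = embed
        self.threshold = threshold  # illustrative value; tune per embedding model
        self.items: Dict[str, Tuple[str, Vector]] = {}

    def add(self, doc_id: str, text: str) -> None:
        """Insert or replace a chunk (re-adding an id re-embeds its text)."""
        self.items[doc_id] = (text, normalize(self.embed(text)))

    def remove(self, doc_id: str) -> None:
        self.items.pop(doc_id, None)

    def best_match(self, question: str) -> Optional[Tuple[float, str]]:
        """Return (similarity, text) of the closest chunk, or None if below threshold."""
        q = normalize(self.embed(question))
        best: Optional[Tuple[float, str]] = None
        for text, vec in self.items.values():
            score = sum(a * b for a, b in zip(q, vec))
            if best is None or score > best[0]:
                best = (score, text)
        if best is None or best[0] < self.threshold:
            return None  # out of domain: nothing relevant is stored
        return best


if __name__ == "__main__":
    store = VectorStore(toy_embed)
    store.add("sid", "The SID chip has three voices.")
    store.add("sprites", "Each sprite is 24 by 21 pixels.")
    for q in ("How many voices does the SID have?", "What is the weather today?"):
        hit = store.best_match(q)
        print(q, "->", hit[1] if hit else "outside my Commodore knowledge")
```

In practice the threshold would need tuning against the real embedding model, since typical similarity scores differ from one model to another.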

This isn’t just a proof of concept. It’s a working productivity tool—assuming your productivity involves retrocomputing, diskettes, and bitmaps.