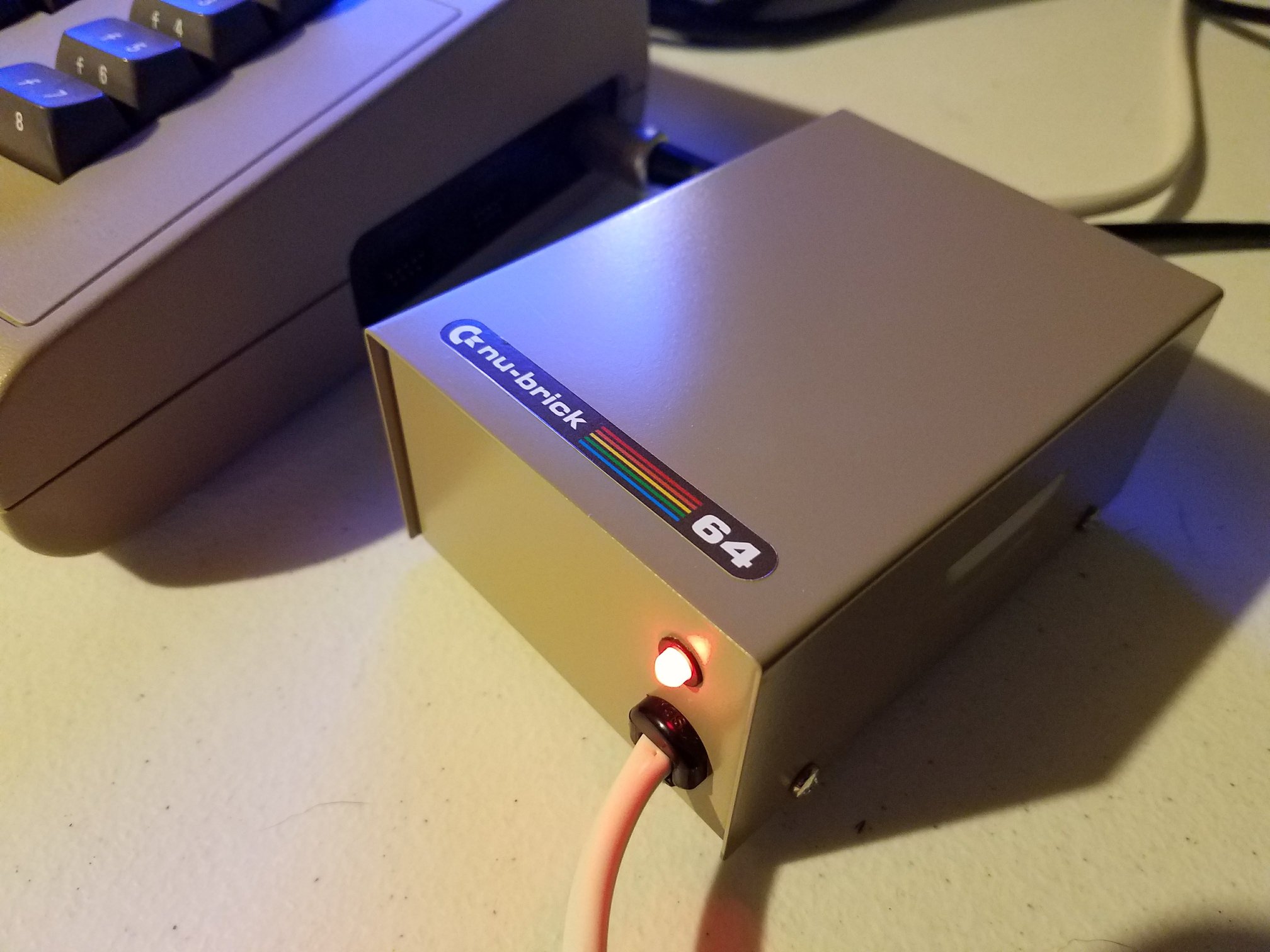

In the latest episode from Piers Rocks, the spotlight is on hand-coded assembly versus compiler-generated code for a software-defined ROM running on a Commodore 64. The ROM in question replaces the KERNAL, BASIC, and character ROMs with a clever STM32F411-based solution. The big question: which approach produces faster, tighter code, a human writing ARM Thumb assembly or GCC compiling optimized C?

To find out, Piers compares three implementations: his own hand-written assembly, a naive C version, and a more optimized C version. Each is tested to determine how low the STM32 can be clocked while still delivering a glitch-free display on the C64. The results—and the reasons behind them—are both enlightening and deeply technical.

Clock Speed as the Performance Benchmark

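Piers uses the minimum stable clock speed as the performance metric. The C64 bus timing is fixed, so a lower STM32 clock leaves fewer CPU cycles to respond to each access. If an implementation can still drive the display cleanly at a lower frequency, its loop needs fewer cycles and is therefore more efficient.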

- Hand-Coded Assembly: Required the lowest clock speed
- Optimized C Version: Needed a moderate clock increase
- Naive C Version: Demanded significantly higher speeds to function correctly

While raw numbers are shared, Piers focuses on why each implementation behaves the way it does—offering an invaluable look at what compilers do well, and where they still fall short.

Inside the Assembly Loop

Piers’s hand-coded loop is a masterclass in embedded optimization. He uses macros to improve readability and flexibility, preloads key register values to avoid repeated calculations, and interleaves instructions to dodge load-use penalties on the Cortex-M4 pipeline.

The logic handles a variety of ROM chip select configurations (active high, active low, mixed) while minimizing branches and memory accesses. The assembly is only 38 bytes long but tightly packed with performance tricks, like reading 16-bit GPIO states in one go and scheduling instructions to avoid stalls.
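The overall shape of such a polling loop can be sketched in C. This is a minimal illustration, not Piers’s code: the pin layout (`ADDR_MASK`, `CS_MASK`), the function names `serve_once` and `serve_loop`, the separate `drive_reg`, and the bounded iteration count are all assumptions made so the logic can run on a host machine. Real firmware would point these at memory-mapped GPIO registers and loop forever.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical pin layout, chosen only for illustration:
 * bits 0-12 of the input port carry the address (8 KB ROM),
 * bits 13-15 carry the chip select lines. */
#define ADDR_MASK 0x1FFFu
#define CS_MASK   0xE000u

/* Decide what to do for one sample of the input pins.
 * Returns 1 and stores the ROM byte in *out when the chip is selected,
 * returns 0 when the data pins should be left undriven. */
static inline int serve_once(uint16_t pins, uint16_t cs_active,
                             const uint8_t *rom, uint8_t *out)
{
    if ((pins & CS_MASK) != cs_active)
        return 0;
    *out = rom[pins & ADDR_MASK];
    return 1;
}

/* Polling loop: sample the pins, look up the byte, write it out.
 * in_reg, out_reg and drive_reg stand in for GPIO registers.
 * cs_active and rom are passed in once so they can stay in CPU
 * registers instead of being recomputed on every pass. */
static inline void serve_loop(const volatile uint16_t *in_reg,
                              volatile uint8_t *out_reg,
                              volatile uint8_t *drive_reg,
                              uint16_t cs_active,
                              const uint8_t *rom, size_t iterations)
{
    for (size_t i = 0; i < iterations; i++) {
        uint16_t pins = *in_reg;   /* one 16-bit read: address + selects */
        uint8_t byte;
        if (serve_once(pins, cs_active, rom, &byte)) {
            *out_reg = byte;       /* present the ROM byte */
            *drive_reg = 1;        /* enable the data outputs */
        } else {
            *drive_reg = 0;        /* release the bus */
        }
    }
}
```

A compiler is free to reorder or expand this however it likes, which is exactly the gap the hand-written version exploits: instruction ordering around the load is chosen by the author rather than left to the optimizer.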

Why Interrupts Won’t Work

Many viewers might ask: why not use interrupts to serve ROM data? Piers answers this directly: interrupt latency on the Cortex-M4 is simply too high. Even in the best case, the core takes 12 cycles to begin executing an interrupt service routine, which can cause timing violations. In this context, a tight polling loop is the only viable option for consistent timing.
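To put the 12-cycle figure in perspective, the arithmetic can be sketched as two small helpers. The function names are made up for this illustration, and the 500 ns window used in the note below is a placeholder rather than a measured C64 timing; only the 12-cycle entry latency comes from the video.

```c
#include <assert.h>
#include <stdint.h>

/* Time taken by `cycles` CPU cycles at a core clock of `hz`, in nanoseconds. */
static inline double cycles_to_ns(uint32_t cycles, uint32_t hz)
{
    assert(hz > 0);
    return (double)cycles * 1e9 / (double)hz;
}

/* Whole CPU cycles that fit into a window of `window_ns` nanoseconds. */
static inline uint32_t cycles_in_window(uint32_t window_ns, uint32_t hz)
{
    return (uint32_t)(((uint64_t)window_ns * hz) / 1000000000u);
}
```

At 100 MHz, the STM32F411’s rated maximum, `cycles_to_ns(12, 100000000u)` gives 120 ns before the first handler instruction runs, and the cost doubles to 240 ns at 50 MHz. With an assumed 500 ns response window, `cycles_in_window(500, 100000000u)` yields 50 cycles, so interrupt entry alone would consume roughly a quarter of the budget, and the share grows as the clock is lowered.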

Comparing the C Implementations

The naive C version, while logically correct, suffers from long and repetitive assembly output. The compiler can’t anticipate the hardware-specific tricks that Piers builds into his assembly. The result is a slower loop, larger code, and a higher minimum clock requirement.

The improved C version fares better. By mimicking some of the assembly-level optimizations (like XORing chip select bits and interleaving instructions), it closes the performance gap. However, redundant instructions—such as UXTH masking—still sneak in. Piers even tests whether redefining GPIO registers as 16-bit values helps. (It does—by about 2%.)
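The XOR trick for chip selects can be illustrated with a short sketch. The bit positions, the polarity mix, and both function names are assumptions for this example, not taken from Piers’s source. The idea is to XOR the sampled pins with the pattern the select lines show when asserted, so "all lines asserted" becomes one compare against zero, whatever the mix of active-high and active-low lines.

```c
#include <stdint.h>

/* Hypothetical layout: three chip select lines on bits 0-2 of the port. */
#define CS_MASK 0x0007u

/* Naive check, one test per line. Assumed polarities for illustration:
 * CS0 active low, CS1 active high, CS2 active low. */
static inline int rom_selected_naive(uint16_t pins)
{
    int cs0 = (pins >> 0) & 1;
    int cs1 = (pins >> 1) & 1;
    int cs2 = (pins >> 2) & 1;
    return cs0 == 0 && cs1 == 1 && cs2 == 0;
}

/* XOR-based check. cs_active holds the level each line has when asserted
 * (1 for active high, 0 for active low). After the XOR every asserted line
 * reads 0, so the whole test is a mask and a compare against zero. */
static inline int rom_selected(uint16_t pins, uint16_t cs_active)
{
    return ((pins ^ cs_active) & CS_MASK) == 0;
}
```

For the polarities assumed above, `cs_active` is `0x0002`, and the two functions agree for every possible pin value. Taking `pins` as a `uint16_t` mirrors the 16-bit register experiment; whether that removes a given UXTH depends on the compiler version and flags.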

Final Reflections

While Piers admits that someone with deeper GCC-fu might squeeze out more performance, his experiment demonstrates a clear takeaway: hand-coded assembly still holds its own in ultra time-critical embedded applications. It takes effort, but in cases like this one, serving ROM bytes to a vintage computer bus, human optimization still outpaces machine-generated code.

This video isn’t just for retro computing nerds—it’s a fantastic tutorial on embedded performance engineering, ARM assembly, and how to reason about compiler behavior in real hardware contexts.

So whether you’re a C64 fan, an embedded developer, or just curious how much smarter a human can be than a compiler—don’t miss this one.